Pawsey Technical Newsletter

The Pawsey Technical Newsletter brings you the latest information on what is happening to Pawsey’s systems in terms of hardware and software changes, it outlines best practices on how to use supercomputing systems and how to deal with incidents that may occur with these very complex machines.

Based on your feedback, the technical newsletter will be populated with regular updates from Pawsey to researchers with a 2023 allocation on Setonix.

In addition to this, a monthly update will also be posted here, which offers the chance for Pawsey technical staff to communicate what Pawsey staff are planning to do for the month ahead.

Please feel free to provide feedback via our helpdesk and we will develop updates in response to your feedback.

05 June 2024

June 2024 Software Update

During June 2024 maintenance, Setonix has gone through several software and hardware updates. The compute nodes on Setonix are updated to the new Cray Programming Environment (CPE) 23.09 which includes newer MPI libraries and a newer Cray compiler version (16.0.1). This update to CPE will result in better performance of the supercomputer but may also imply certain modifications and updates in the working environment to users. For example, changes in programming environment in some cases may imply the need of recompilation of user’s codes.

This is the summary of changes:

- The new version of the software stack is 2024.05 which is available by default. It sits alongside version 2023.08 that has been available on Setonix before the upgrade. Researchers have the option to use the older 2023.08 deployment.

- A package manager, spack, has been upgraded from version 0.19.0 to 0.21.0. The new version brings over 7400 software recipes, bug fixes, more supported applications and libraries, and further support for AMD GPUs.

- Several newer versions of ROCm up to 5.7.3 are now available.

Users may refer to the following page which is created to consolidate in one place all the important points, recommended actions, and changes https://pawsey.atlassian.net/wiki/spaces/US/pages/190087173/June+2024+Software+Update+-+Important+Information.

23 May 2024

AI Systems and Security

There are already a number of research projects taking advantage of the capabilities that Setonix offers for artificial intelligence (AI) and machine learning (ML). You may be aware that the Australian Cyber Security Centre, together with other international government cyber organisations, have recently issued guidelines for deploying AI systems securely (https://www.cyber.gov.au/resources-business-and-government/governance-and-user-education/artificial-intelligence/deploying-ai-systems-securely). Although these are aimed at organisations or businesses deploying or operating AI systems, much of the guidance is applicable to the research and development stages too.

First of all – where has the software you’re using come from? Have you developed it all in-house or with known trusted collaborators? Does it do what you think it does? We are already seeing complex supply-chain attacks on software, and AI tools won’t be exempt from these types of slow careful attacks. Think about where your training data has come from: Is it ‘tainted’ or biased in ways you’re not expecting? Will that influence your models?.

The Pawsey systems teams and upstream vendors strive to provide a secure platform on which researchers can work, however this does not absolve the end-user from maintaining best practices of revision control, backups (no filesystems at Pawsey are backed up) and writing hardened (and bug free!) code.

05 October 2023

Important update concerning Acacia and the mc client

This update is relevant for all researchers using the mc client to upload data to Acacia.

We have seen that, for file sizes above 200GB, the mc client can fail without returning a proper error code. For files at or below 200GB, mc appears to function properly. We have not observed this problem in either awscli or rclone for file sizes up to 1TB.

Going forwards, we are strongly recommending that you do not use the mc client.

For transferring files, we will be recommending the use of rclone due to better overall scalability, fault behaviour, and support.

The Acacia – User Guide has been updated to reflect this, with particular attention to:

- how to initially configure rclone,

- understanding the command syntax via some of the common use cases,

- advanced policy features not being supported by rclone – with either awscli or pshell used instead, if required.

Other changes to the documentation include some additional features that rclone provides, such as sync-style transfers.

Please contact us (help@pawsey.org.au) if you still require assistance after reading the Acacia – User Guide.

05 September 2023

During Setonix September maintenance (Sep, 5), the cluster has gone through several software and hardware updates.

After the maintenance the compute nodes on Setonix have the new Cray Programming Environment (CPE) 23.03. This update to CPE will result in better performance of the supercomputer but will also imply a series of modifications and updates in the whole working environment to users. For example, changes in programming environment that would imply the need of recompilation of software stack delivered by Pawsey and also of user’s codes; or changes in the scheduler version and configuration that would imply the need of modification of user’s slurm job scripts.

We have updated our documentation pages with the important points that researchers will need to pay attention, together with the recommended actions or changes to take. We strongly recommend users to check each of these pages in order to be aware of the actions they should take relative to changes in the configuration of Setonix.

Detailed changes, actions and recommendations

10 May 2023

Using Conda? Make your life easier with Mamba

Although we recommend using containers instead of Conda where possible, sometimes you just need to use Conda. Mamba can improve your experience with Conda installs.

Why Mamba?

- It’s incredibly fast for downloads

- You can combine it with Conda, mixing and matching the two systems seamlessly

- If you know how to use Conda, you know how to use Mamba

- It may help alleviate the issue with too many small files being created during Conda and clogging up the /software partition.

6 April 2023

Important Information

The /scratch purge policy is scheduled to commence on the 15th April 2023 (ie. Any file that has not be accessed since the 15th March 2023 will be subject to removal).

Key activities this week

- HPE updated the slingshot fabric configuration for Setonix this week.

- 128 Phase 2 CPU nodes added to the work partition, bring us to a total of 1388 CPU nodes available for researchers.

- 48 GPU Nodes are ready for early adopters to test and a further 64 GPU nodes should be online before Easter.

Activities planned for next week

- Early adopters will have access to the Setonix GPU nodes.

- final planning for the CSM update on the Test and Development System.

Look ahead

| Date | Activity | Impact on Setonix availability |

|---|---|---|

| April 2023 | TDS Neo update, and CSM update

Completing this upgrade to the TDS allows HPE and Pawsey to test the upgrade process before performing the same upgrade on Setonix. |

There will be no impact on the availability of Setonix. |

| May 2023 | Setonix Neo update, slingshot update and CSM update | HPE advise that a multi-day outage may be required on Setonix. Further details have been requested to facilitate better defining this outage. |

| June 2023 | Setonix acceptance testing | HPE advise that a number of small (2 – 6 hour) outages will be required on Setonix over a period of 2 – 4 weeks. |

31 March 2023

Dear researchers,

I wrote to you last week to let you know that the HPE’s processes to bring Setonix Phase 2 online for Pawsey were taking longer than planned and that Pawsey has urgently requested additional resources from HPE to assist with triaging and site configuration tasks.

The positive impact delivered by the increase in US-based resources to assist with triaging and site based resources to support on-site configuration activities has been felt this week and at the time of writing this update (Thursday evening) 70% of the total Setonix CPU capability is available to researchers. The remaining CPU nodes will be made available to researchers next week.

The current forecast is for the GPU’s to be made available to early adopters in April 2023 and for all those with a 2023 GPU allocation to be given access to the GPU’s in May 2023. Keep an eye out for training and support documentation that will become available in May to support the migration to the Setonix GPU’s.

Kind regards,

Stacy Tyson

Important Information

The /scratch purge policy is scheduled to commence on the 15th April 2023 (ie. Any file that has not be accessed since the 15th March 2023 will be subject to removal).

Key activities this week

- 608 Phase 2 CPU nodes have been added to the work partition.

- 32 Phase 2 GPU nodes have been handed to the services team for testing and preparation for early adopters later in April.

Activities planned for next week

- HPE to focus on continuing to boot and handover the Phase 2 nodes.

- HPE require an outage next week (4th April) to update the Slingshot fabric configuration and remediate faulty slingshot cables.

Look Ahead

| Date | Activity | Impact on Setonix availability |

|---|---|---|

| April 2023 | TDS Neo 6.2 update, and update to CSM 1.3.1

Completing this upgrade to the TDS allows HPE and Pawsey to test the upgrade process before performing the same upgrade on Setonix. |

There will be no impact on the availability of Setonix. |

| May 2023 | Setonix Neo 6.2 update, slingshot update and update to CSM 1.3.1 | HPE advise that a multi-day outage may be required on Setonix. Further details have been requested to facilitate better defining this outage. |

| June 2023 | Setonix acceptance testing | HPE advise that a number of small (2 – 6 hour) outages will be required on Setonix over a period of 2 – 4 weeks. |

24 March 2023

Setonix

Dear researchers,

I acknowledge that delays in the availability of our new HPE Cray system, Setonix, during this first quarter of 2023 may have impacted your research program and I apologise if this has had a negative impact.

HPE’s processes to bring Setonix Phase 2 online for Pawsey are taking longer than planned. Each node needs to be booted, error messages need to be triaged and then local HPE personnel perform the required hardware changes. HPE are experiencing more hardware issues than expected and addressing the stability issues experienced last week has also contributed to delays.

Pawsey has requested additional resources from HPE; three additional US-based staff members are now assisting with triaging issues and three further HPE personnel will arrive at Pawsey next week to support hardware configuration on site. Phase 1 is now back online, and HPE will hand over Phase 2 nodes progressively to Pawsey (one chassis at a time) so that we can bring them online for researchers as quickly as possible.

I will provide further reports on our progress as we reach key milestones in coming weeks.

Kind regards,

Stacy Tyson

Important Information

The /scratch purge policy is scheduled to commence on the 15th April 2023 (ie. Any file that has not be accessed since the 15th March 2023 which be subject to removal).

Key activities this week

On Friday 17th March Setonix was taken offline by Pawsey and returned to HPE due to stability issues. HPE worked through the weekend to convert Setonix to use a TCP configuration. Setonix was returned to Pawsey and our researchers on Monday 20th March and is currently performing well.

HPE are progressively bringing up Phase 2 nodes. Pawsey staff will make the additional CPU nodes available to researchers as soon as they are released to Pawsey.

Activities planned for next week

HPE to focus on continuing to boot and handover the Phase 2 nodes.

Look Ahead

Due to the delays with HPE completing the deployment of the Phase 2 nodes, there may be some movement in the below dates. We will update these details weekly and will include any changes when we receive the information from HPE.

| Date | Activity | Impact on Setonix availability |

|---|---|---|

| April 2023 | TDS Neo 6.2 update, and update to CSM 1.3.1

Completing this upgrade to the TDS allows HPE and Pawsey to test the upgrade process before performing the same upgrade on Setonix. |

There will be no impact on the availability of Setonix. |

| May 2023 | Setonix Neo 6.2 update, slingshot update and update to CSM 1.3.1 | HPE advise that a 5 – 7 day outage will be required on Setonix. |

| June 2023 | Setonix acceptance testing | HPE advise that a number of small (2 – 6 hour) outages will be required on Setonix over a period of 2 – 4 weeks. |

17 March 2023

Setonix

Issues

A number of you have noticed issues accessing the scratch filesystem. This presents itself as a Lustre errors, from which the node does not recover. The issue is now affecting approximately half the nodes in Setonix. At this stage the errors appears to be the symptom of an issue in either the Slingshot fabric or the lustre configuration.

We have created a reservation on Setonix to allow the system to drain, and handed the system to HPE to resolve the issue.

At this stage we don’t have an ETA on when the issue has been resolved, but Pawsey has stressed that it wants Phase 1 and Phase 2 returned to researchers in a stable and supported configuration as soon as possible.

Any further updates will be posted here as we get them: https://status.pawsey.org.au/incidents/ld32gsxv37dw

We apologise for the impact this is having on your research.

Important Information

The /scratch purge policy is scheduled to commence on the 15th April 2023 (ie. Any file that has not be accessed since the 15th March 2023 which be subject to removal).

Key achievements this week

Setonix CPU nodes (phase 1) was returned to service on Monday at 2pm WST.

Planning for the test deployment of Neo 6.2, Slingshot 2.0.1, COS 2.4.103 and CSM 1.3.1 on the Test and Development System has commenced.

HPE are progressively bringing up Phase 2 nodes. This work will continue next week and once complete Pawsey will be able to release more CPU nodes for researcher use.

Activities planned for next week

HPE to focus on resolving the IO issue and returning Setonix to service.

Look Ahead

Early April 2023

-

TDS Neo 6.2 update, and update to CSM 1.3.1 (no impact on researchers)

May 2023

- Setonix Neo 6.2 update, slingshot update and update to CSM 1.3.1 (This will require ~ 5 day researcher outage)

June 2023

- Setonix acceptance testing (This will require a number of short outages that will be planned and announced prior to commencing the testing.)

13 March 2023

Known ongoing issues on Setonix

MPI jobs failing to run compute nodes shared with other jobs

MPI jobs running on compute nodes shared with other jobs have been observed failing at MPI_Init providing error messages similar to:

MPICH ERROR [Rank 0] [job id 957725.1] [Wed Feb 22 01:53:50 2023] [nid001012] - Abort(1616271) (rank 0 in comm 0): Fatal error in PMPI_Init: Other MPI error, error stack:MPIR_Init_thread(170).......:MPID_Init(501)..............:MPIDI_OFI_mpi_init_hook(814):create_endpoint(1359).......: OFI EP enable failed (ofi_init.c:1359:create_endpoint:Address already in use)aborting job:Fatal error in PMPI_Init: Other MPI error, error stack:MPIR_Init_thread(170).......:MPID_Init(501)..............:MPIDI_OFI_mpi_init_hook(814):create_endpoint(1359).......: OFI EP enable failed (ofi_init.c:1359:create_endpoint:Address already in use) |

This error is not properly a problem with MPI but with some components of the Slingshot network. For temporary workaround please refer to Known Issues on Setonix

Parallel IO within Containers

Currently there are issues running MPI-enabled software that makes use of parallel IO from within a container being run by the Singularity container engine. The error message seen will be similar to:

Assertion failed in file ../../../../src/mpi/romio/adio/ad_cray/ad_cray_adio_open.c at line 520: liblustreapi != NULL/opt/cray/pe/mpich/default/ofi/gnu/9.1/lib-abi-mpich/libmpi.so.12(MPL_backtrace_show+0x26) [0x14ac6c37cc4b]/opt/cray/pe/mpich/default/ofi/gnu/9.1/lib-abi-mpich/libmpi.so.12(+0x1ff3684) [0x14ac6bd2e684]/opt/cray/pe/mpich/default/ofi/gnu/9.1/lib-abi-mpich/libmpi.so.12(+0x2672775) [0x14ac6c3ad775]/opt/cray/pe/mpich/default/ofi/gnu/9.1/lib-abi-mpich/libmpi.so.12(+0x26ae1c1) [0x14ac6c3e91c1]/opt/cray/pe/mpich/default/ofi/gnu/9.1/lib-abi-mpich/libmpi.so.12(MPI_File_open+0x205) [0x14ac6c38e625] |

Currently it is unclear exactly what is causing this issue. Investigations are ongoing.

Workaround:

There is no workaround that does not require a change in the workflow. Either the container needs to be rebuilt to not make use of parallel IO libraries (e.g. the container was built using parallel HDF5) or if that is not possible, the software stack must be built “bare-metal” on Setonix itself (see How to Install Software).

Slurm on Setonix lacks NUMA support

Currently Slurm on Setonix does not support NUMA bindings. This means that there are number of NUMA sbatch/srun options that might not work properly and/or potentially harm the performance of applications. One specific example is that using srun‘s --cpu-bind=rank_ldom option significantly limits the performance of applications on Setonix assigning two threads on a single physical core. Once the issue is resolved it will be communicated promptly.

Changes to Singularity Modules on Setonix

Due to problems with the interaction of binded libraries for the Singularity module, it has been decided to make available several Singularity modules, each of them specifically suited to the kind of applications to be executed. The following singularity modules now exist on Setonix:

singularity/3.8.6-mpi: for MPI applicationssingularity/3.8.6-nompi: for applications not needing MPI communication, as most of the Bioinformatics toolssingularity/3.8.6-askap: specially set-up for ASKAP radioastronomy project (not relevant for most users)singularity/3.8.6: same as the MPI version. The use of this module should be avoided as it is going to be removed in the near future. Users should update their scripts and use one of the other modules above

For further information please check our updated documentation: Containers

10 March 2023

Key achievements this week

- Neo 4.4 upgraded to Neo 4.5.

- Slingshot integration tasks completed.

HPE will perform testing of the above works over the weekend in preparation to hand Setonix back to Pawsey by Monday morning.

Issues

- When HPE updated the slurm configuration to add the Phase 2 CPU and GPU nodes, they have wiped all the jobs sitting in the queue before Setonix was put into maintenance. Jobs will have to be resubmitted to the queue.

Activities planned for next week

- Planning for the installation of Neo 6.2 and CSM 1.3.1 on the Pawsey Test and Development System (TDS). This install will be used to verify the install time and possible researcher disruption associated with installing CSM 1.3.1.on Setonix (planned for May 2023).

Look Ahead

March & April 2023

- TDS Neo 6.2 update, and update to CSM 1.3.1 (no impact on researchers)

May 2023

- Setonix Neo 6.2 update, slingshot update and update to CSM 1.3.1 (This will require ~ 5 day researcher outage)

June 2023

- Setonix acceptance testing (This will require a number of short outages that will be planned and announced prior to commencing the testing.)

3 March 2023

Key achievements this week

- Switch configurations were upgraded in preparation for the integration of phase 1 and 2.

- Details for next weeks outage finalised:

- Neo 4.4 to be upgraded to Neo 4.5.

- Slingshot integration tasks to be completed.

Activities planned for next week

- A 7-day outage is planned for next week to undertake an update to the Neo software on /scratch and /software and the final slingshot integration tasks. This outage is scheduled to run from 7 March to 13 March.

Look Ahead

March & April 2023

- TDS Neo 6.2 update, and update to CSM 1.3.1 (no impact on researchers)

May 2023

- Setonix Neo 6.2 update, slingshot update and update to CSM 1.3.1 (This will require ~ 5 day researcher outage)

June 2023

- Setonix acceptance testing (This will require a number of short outages that will be planned and announced prior to commencing the testing.)

24 February 2023

Key achievements this week

- On Thursday 23 February, the slingshot network failed and required a complete restart. HPE and Pawsey worked overnight to restore service to researchers at 11am on Friday. HPE will be providing a root cause analysis to explain why the outage has happened and how to ensure it doesn’t happen in the future.

- HPE presented the software roadmap to Pawsey. This information provided will be used as input to the long term planning of updates for Setonix.

- Pawsey and HPE workshopped the schedule to integrate Setonix phases 1 & 2. The current plan will deliver the phase 2 CPU’s to researchers at the end of March 2023. The GPU’s will be tested by Pawsey prior to release to researchers and are predicted to be available to researchers late in April 2023.

- Pawsey and HPE reviewed the acceptance test plan and identified tests that will require a researcher outage on Setonix. These outages will be planned to minimise impact on researchers. Acceptance testing will not occur until May/June 2023.

Activities planned for next week

- The issue with the chiller that required Pawsey to cancel the planned outage this week has been managed and the researcher outage is re-scheduled for 28 February to 1 March to update the switch configurations used to connect the management plane in preparation for the integration of phase 1 and 2.

Look Ahead

- A further researcher outage is being planned for 6-11 March to update the Slingshot network in preparation for bringing the CPU nodes from Setonix Phase 2 into service.

- The following upgrades are currently forecast to occur in May 2023:

- The software stack upgrade

- The Slingshot network to be upgraded to 2.0.1

- /software and /scratch to be upgraded to NEO 6.2.

17 February 2023

Key achievements this week

- Documentation received from HPE detailing the works to be completed in the next researcher outage (Canu switch configuration change).

- 96 nodes returned to service following hardware replacement and stress testing to ensure their fitness for duty.

- 98 additional nodes were temporarily reallocated from the ASKAP partition to the general compute partition to help clear the queue backlog.

Activities planned for next week

- Pawsey and HPE will be reviewing the acceptance test plan to identify and plan any testing requiring a researcher outage on Setonix.

- HPE will be presenting the software roadmap to Pawsey. This information will assist with ongoing planning.

- Pawsey and HPE will be workshopping the schedule following the software roadmap presentation.

Look Ahead

- A researcher outage is being planned to update the Canu configurations on switches used to connect the management plane in preparation for the integration of phase 1 and 2.

- A further researcher outage is being planned to update the Slingshot network in preparation for bringing the CPU nodes from Setonix Phase 2 into service.

- An upgrade to the software stack will be scheduled in coming weeks.

- An upgrade to Slingshot 2.0.1 will be scheduled in coming weeks.

- And upgrade of /software and /scratch to NEO 6.2 will be scheduled in coming weeks.

25 January 2023

January maintenance

Setonix Phase 1 has been returned to service with data mover nodes temporarily repurposed as login nodes. Unfortunately, data mover nodes are based on AMD Rome architecture which is different from AMD Milan architecture used for all compute nodes and previous login nodes. As a result of this change researchers will notice two major differences.

Listing modules

module avail command on current login nodes will ONLY return all previously available modules on the data mover nodes. This is not reflective of modules available on compute nodes. To list modules available on compute nodes, invoke an interactive session on compute nodes with the following commands:

srun -n 1 --export=HOME,PATH --pty /bin/bash -i

module avail

The entire software stack is still available with the same components and versions as before the maintenance.

Compilation of software

Compilation of software should be performed in an interactive session on compute nodes initiated with the following command:

srun -n 1 --export=HOME,PATH --pty /bin/bash -i

Batch scripts submitted to compute nodes should not be affected.

Jobs submitted to copy partition should not be affected.

We apologise for the inconvenience. We will provide an update when the above issues are resolved. Please contact us at help@pawsey.org.au![]() in case of any further questions.

in case of any further questions.

12 December 2022

December maintenance

The December maintenance that would have happened on the first Tuesday of the month has been rescheduled for the 15th to better align with the works for Setonix Phase 2 hardware installation.

Setonix Phase 2

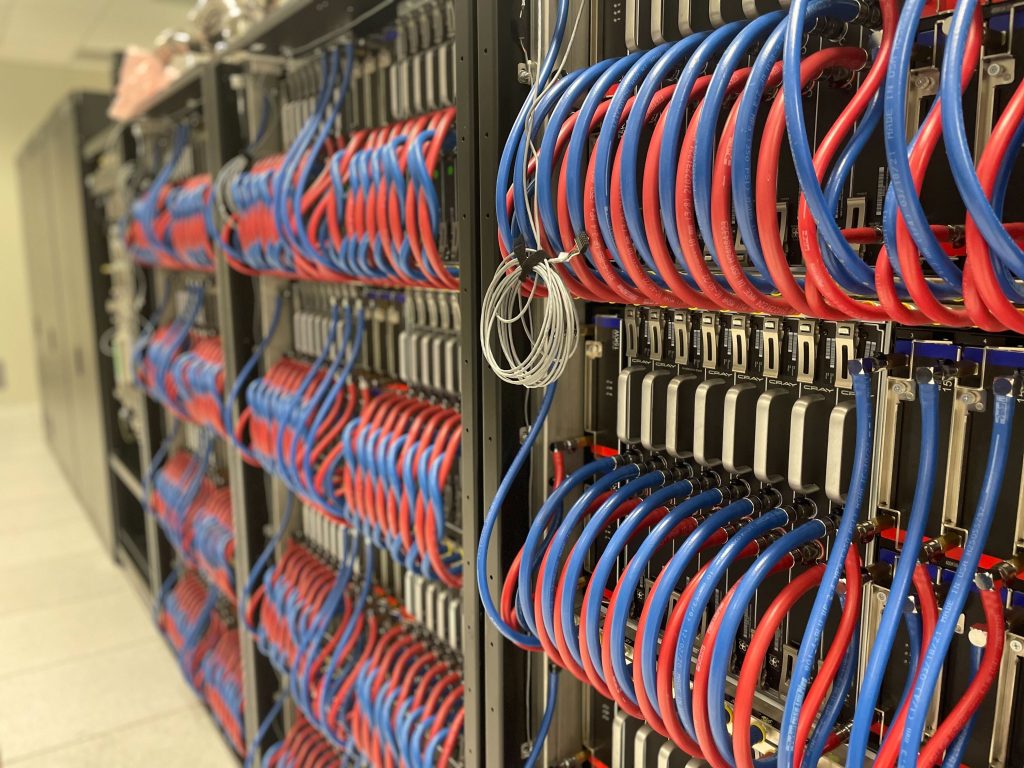

The process of installing Phase 2 of Setonix is progressing, technicians are assembling compute cabinets and the cooling mechanism, among other complex systems. The picture below shows the GPU cabinets without their back doors, with a very dense concentration of compute nodes and cooling pipes, while they were being installed. Soon after the maintenance, researchers will have access to the CPU partition within the new Phase 2 system and the CPU nodes of Phase 1 will be taken offline to upgrade their network cards to Cassini. Phase 2 GPU nodes will be available early next year after acceptance testing is completed in January. The page for the upcoming maintenance is M-2022-12-15-SC

Topaz and other Pawsey systems

Topaz continues to be available for migration purposes to support codes that are not yet supported on AMD MI250 GPUs of Setonix Phase 2 compute nodes. The main focus of Pawsey’s Supercomputing Applications Team will be on providing application support on Setonix Phase 2 GPU partition. If you need particular software to be installed or upgraded to a newer version on Topaz please open a helpdesk ticket.

The old /scratch filesystem, the one that was mounted on Magnus and Zeus, and is currently available on Topaz, will be taken offline.

On Topaz, it will be replaced with a partition of /group; such repurposed section will still be seen as /scratch. Pawsey staff will take care of moving files to their new locations for Topaz researchers.

The /scratch space available on Topaz will be reduced to accomodate only Topaz and remote visualisation projects.

Garrawarla will see its /astro filesystem being taken down from mid December to early January as it’s being rebuilt to the latest version of Lustre and repurposed as a scratch filesystem.

Nimbus Control Plane migration

In contrast to most maintenance on Nimbus, Pawsey’s cloud computing infrastructure, during the upcoming maintenance period an API and dashboard outage will be required. This is to finalise the migration to a new control plane, a project which has been underway for some months and requires this outage for the last few components. We will be shifting the message queues, database servers, and load balancers during this outage. There is a range of benefits to finishing this project, including smoother major upgrades, more efficient operations, and a wide-ranging review of configuration settings that have changed on the interrelated services over the past five years of operation.

It is worth noting that existing instances on Nimbus will not be affected by this outage because there will be no major changes to compute nodes. You will still be able to use your instances, but you will not be able to create new ones or access the Nimbus dashboard.

Contact details

This a reminder to all users that it’s important to keep Pawsey informed of any institution, email, and mobile number changes you may have. You can do this at any time yourself by visiting the “My Account” section of the user portal (https://portal.pawsey.org.au/origin/portal/account) and submitting any changes. If you’ve moved institutions, you won’t be able to change the email address yourself, but simply drop a note to the helpdesk team (help@pawsey.org.au) who will make the changes for you.

If you’re a principal investigator (PI) or Administrator for any projects, please take the end-of-year opportunity to review your team membership, and let us know of any people who’ve left.

Known ongoing issues

Here are some important currently active known issues. To keep up to date with the ongoing Setonix issues please refer to Known Issues on Setonix.

MPI jobs

The libfabric library is still affecting MPI and selected multi-node jobs. Errors have been noted specifically with the following applications:

- Spurious memory access errors with selected multicore VASP runs on a single node

- Out of memory errors in selected multi-node NAMD runs.

- I/O errors with selected large runs of OpenFOAM.

- Nektar when it runs at a large scale.

A full resolution of these issues is expected with the completion of the Setonix Phase 2 installation in January.

Spack installations

If you ever installed software using Spack prior to the October/November downtime, you may be affected by a configuration issue such that new installations will end up in the old gcc/11.2.0 installation tree. Please visit the Known Issues on Setonix page to know more.

If you are affected and want to use the older user and project installation tree, read the previous issue of the Technical Newsletter where we demonstrate how to achieve that.

We are working on a resolution to this misconfiguration error. In the meantime, to avoid this happening to your future Spack installations you can safely remove the ~/.spack directory.

Resolved issues and incidents

There has been an incidence of hardware failure on worker nodes on 25-Nov-2022. This affected the filesystem service DVS and caused a large number of nodes to go out of service and induced failures in the SLURM scheduler. Jobs submitted with SLURM may have failed immediately or have been paused indefinitely. A fix and reboot of faulty nodes were completed on 30-Nov-2022.

There have also been issues observed related to Internal DNS failure. Partial disk failure led to intermittent failures in internal name resolution services. Several jobs ended with errors relating to getaddrinfo() or related library functions, for example:

srun: error: slurm_set_addr: Unable to resolve "nid001084-nmn" |

The issue was resolved on 25-Nov-2022.

23 November 2022

Changes in the HPE-Cray environment and the Slurm scheduler

Setonix software upgrade that was performed during September and October brought some changes to libraries and applications with respect to what was available since July on the new supercomputer.

- Setonix is now running sles15sp3 on top of the Linux kernel version 5.3.18. Several libraries are present in their newer versions as a consequence of this change.

- The default compiler is now

gcc/12.1.0, and the newcce/14.0.3is available too. - The

xpmemMPI-associated library is now correctly loaded by compiler modules. For some codes with much MPI communication, this may result in a 2x speedup. - The Slurm scheduler has been upgraded to 22.05.2. Slurm is now aware of L3 cache domains and is able to place processes and threads such that the L3 cache is shared across (index-) neighboring threads. Another important change is that

srunno longer inherits the--cpus-per-taskvalue fromsbatchandsalloc. This means you implicitly have to specify--cpus-per-task/-con yoursruncalls.

Changes in the Pawsey-provided systemwide software stack

The scientific software stack provided by Pawsey, comprising of applications such as VASP, CP2K and NAMD, as well as libraries like Boost and OpenBLAS, has been completely rebuilt because the OS upgrade changed libraries the previous software stack used. The older software stack is still present at its original location, but it is not added to the module system anymore. Here is a list of relevant changes:

- The compiler used for the new build is

gcc/12.1.0, as opposed togcc/11.2.0. - Several packages, like boost and casacore, were updated to the latest version.

- A few Python modules have the compatible Python version as part of their module name.

- Recipes for some packages were added and/or fixed. An example is AstroPy.

- Singularity module now comes in several flavours which mount different Pawsey filesystems at launch. These extra modules are

singularity/*-askapand-astro, which are tailored to radio-astronomy.

We suggest removing the ~/.spack folder because it may contain configurations from the old software stack that may cause errors when installing new packages.

Using your project and user’s previous software stack

By default you won’t be able to see modules of the software you installed before the downtime, both project-wide and user installations, nor use the previously installed Spack packages to build new software. In fact, as a consequence of the drastic changes in the system, we suggest recompiling any software that you installed on Setonix before the downtime. However, your pre-upgrade installations are still present and can be viewed in the module system by executing the command

module use /software/projects/$PAWSEY_PROJECT/setonix/modules/zen3/gcc/11.2.0

and/or

module use /software/projects/$PAWSEY_PROJECT/$USER/setonix/modules/zen3/gcc/11.2.0

then running the usual module avail.

Purge policy on /scratch

The purge policy on /scratch filesystems is temporarily paused to ease user experience with Pawsey supercomputers in this period of transition. The purge policy mandates that files residing onto /scratch that haven’t been used for at least 30 days will be deleted to keep the filesystem performant. We are planning to switch this functionality back on middle of January, 2023 at earliest. This is under the assumption that the filesystem will not get fully utilised before that date. If there are changes to the plan they will be communicated promptly.

Bugfixes and Improvements

The following is a concise list of major improvements and fixes that came with the latest Setonix upgrade.

- Slurm is now aware of L3 cache regions of the AMD Milan CPU and will place MPI ranks and OpenMP/Pthreads accordingly. This should improve performance.

- Singularity was unable to run multi-node jobs when Setonix came back online. This was fixed as of 14/11/2022.

- More data-mover nodes are now operational.

Known Issues

Investigations carried out on Setonix unveiled important issues that should be fixed in the near future, but that you know about immediately.

MPI

There are several issues with large MPI jobs that will be resolved with phase-2. Currently, these issues impact all multi-node jobs and are

- Poor performance for jobs involving point-to-point communication.

- Workaround: Add the following to batch scripts

# add output of the MPI setup, useful for debugging if requiredexport MPICH_ENV_DISPLAY=1export MPICH_OFI_VERBOSE=1# and start up connections at the beginning. Will add some time to initialisation of code.export MPICH_OFI_STARTUP_CONNECT=1

- Workaround: Add the following to batch scripts

- Jobs hang for asynchronous communication, where there are large differences between a send and a corresponding receive.

- Workaround: similar to above, the poor performance work around.

- Large jobs with asynchronous communication that have large memory footprint per node crash with OFI errors, bus errors or simply the node being killed.

- Workaround: reduce the memory footprint per node by change the resource request and spreading the job over more nodes.

- Large jobs with asynchronous point-to-point communication crash with OFI errors.

- Workaround: reduce the number of MPI ranks used, possible at the cost of underutilising a node. One can also turn off the start up connection, i.e.,

export MPICH_OFI_STARTUP_CONNECT=0, without having to reduce the number of MPI ranks at the cost of performance. However, this latter option is only viable if there are not large differences in time between send and subsequent receives, otherwise the job will hang.

- Workaround: reduce the number of MPI ranks used, possible at the cost of underutilising a node. One can also turn off the start up connection, i.e.,

Slurm

- There is a known bug in slurm related to memory requests, which will be fixed in the future with a patch. The amount of total memory on a node is incorrectly calculated when requesting more than 67 MPI processes per node with

--ntasks-per-node=67or more- Workaround: Provide the total memory required with

--mem=<desired_value>

- Workaround: Provide the total memory required with

- For shared node access, pinning of cpus to MPI processes or OpenMP threads will be poor.

- Workaround:

srunshould be run with-m block:block:block

- Workaround:

- Email notifications implemented through the

--email-typeand--email-useroptions of Slurm are currently not working. The issue will be investigated soon.

File quota on /software

To avoid the metadata servers of the /software filesystem being overwhelmed with too many inodes, Pawsey imposes a 100k quota on the number of files each user can have on said filesystem. However, we acknowledge the chosen limit may be too strict for some software such as weather models, large Git repositories, etc. We are working on a better solution to be rolled out very soon where (a higher) quota on inodes is applied to whole project directory rather than on a per-user basis. This is in addition to the quota on the disk space, measured in GB, occupied by the project, which will stay unchanged.

You can run the following command to check how many files you own on /software:

lfs quota -u $USER -h /software

If the high file count comes from a Conda environment that is mature and won’t see many changes you should consider installing it within a Singularity or Docker container. In this way, it will count as a single file. To know more about containers, check out the following documentation page: Containers.