Convolutional Neural Network for Classification of MWA Images

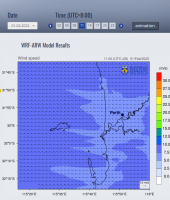

For GLEAM-X, a survey of the southern sky at radio frequencies, the Murchison Widefield Array (MWA) telescope in Western Australia will produce several thousand images. Not all of these images meet the quality standards required for direct inclusion in further processing. To identify the images that need additional processing, a deep convolutional neural network (CNN) can classify the telescope images and distinguish faulty images from good ones. The code is written in Python and built on the PyTorch framework, which provides GPU-accelerated tensor computation and automatic differentiation for deep learning.

Area of science

Astronomy, Radio Astronomy

Systems used

Zeus

Applications used

MWA modules, a container with PyTorch, other Python libraries

The Challenge

Until now, researchers have classified a significant portion of the images by hand. With the ever-growing volume of data, this will soon no longer be feasible within a reasonable time. Successfully deploying the classifier described above significantly reduces the time and cost of pre-processing unknown datasets, which will be especially valuable for the volume of data expected from the Square Kilometre Array (SKA).

The Solution

Deep neural networks can approach human performance at classifying or segmenting image data, nearly always much faster and often with higher accuracy. This project applies that capability to radio-astronomy images.
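A minimal PyTorch sketch of such an image classifier is shown here, assuming single-channel image cutouts and two hypothetical labels, good (0) and faulty (1). The class name `ImageQualityCNN`, the layer sizes, and the helpers `train_step` and `predict_faulty` are illustrative assumptions, not the project's actual network or training code.

```python
import torch
import torch.nn as nn


class ImageQualityCNN(nn.Module):
    """Hypothetical small CNN labelling a single-channel image as good (0) or faulty (1)."""

    def __init__(self, num_classes: int = 2):
        super().__init__()
        # Three convolution blocks; the first two halve the spatial size via max pooling.
        self.features = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, kernel_size=3, padding=1),
            nn.ReLU(),
            # Global average pooling gives (N, 64, 1, 1) for any input image size.
            nn.AdaptiveAvgPool2d(1),
        )
        self.classifier = nn.Linear(64, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.features(x)
        x = torch.flatten(x, 1)  # (N, 64)
        return self.classifier(x)  # raw logits, shape (N, num_classes)


def train_step(model: nn.Module, images: torch.Tensor, labels: torch.Tensor,
               optimizer: torch.optim.Optimizer) -> float:
    """One supervised update using cross-entropy loss; returns the loss value."""
    model.train()
    optimizer.zero_grad()
    logits = model(images)
    loss = nn.functional.cross_entropy(logits, labels)
    loss.backward()
    optimizer.step()
    return loss.item()


@torch.no_grad()
def predict_faulty(model: nn.Module, images: torch.Tensor) -> torch.Tensor:
    """Boolean tensor: True where the model predicts class 1 (faulty)."""
    model.eval()
    return model(images).argmax(dim=1) == 1


if __name__ == "__main__":
    torch.manual_seed(0)
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model = ImageQualityCNN().to(device)
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
    # Random stand-in data: 8 single-channel 128x128 "images" with random labels.
    images = torch.randn(8, 1, 128, 128, device=device)
    labels = torch.randint(0, 2, (8,), device=device)
    loss = train_step(model, images, labels, optimizer)
    print(f"loss={loss:.4f}", predict_faulty(model, images).tolist())
```

Global average pooling is used here so the same network accepts images of different sizes; a real pipeline would instead train on labelled MWA images over many epochs and validate on a held-out set.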

The Outcome

Training a neural network involves computing and repeatedly updating millions of parameters. With image data in particular, many of these values must be held in memory and accessed at the same time. This demands capable hardware, above all GPUs with ample memory. Pawsey provides a wide range of systems suitable for deploying deep learning algorithms.
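The memory demand can be estimated with simple arithmetic. The sketch below assumes 32-bit floats and the Adam optimizer (which keeps two extra values per parameter), and ignores framework overhead; the helper names and the example sizes (a 10-million-parameter model, a batch of 32 images at 512x512 pixels with 64 feature channels) are hypothetical and not taken from the project.

```python
GIB = 2**30  # bytes per gibibyte


def conv2d_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Learnable values in a square-kernel 2D convolution layer with bias."""
    return out_channels * in_channels * kernel_size * kernel_size + out_channels


def linear_params(in_features: int, out_features: int) -> int:
    """Learnable values in a fully connected layer with bias."""
    return in_features * out_features + out_features


def training_state_bytes(n_params: int, bytes_per_value: int = 4) -> int:
    """Assumes fp32 and Adam: weights, gradients and two moment buffers per parameter."""
    return 4 * n_params * bytes_per_value


def activation_bytes(batch: int, channels: int, height: int, width: int,
                     bytes_per_value: int = 4) -> int:
    """Memory for one layer's output feature maps, kept for the backward pass."""
    return batch * channels * height * width * bytes_per_value


if __name__ == "__main__":
    # Hypothetical sizes, chosen only to illustrate the orders of magnitude.
    state = training_state_bytes(10_000_000)
    acts = activation_bytes(batch=32, channels=64, height=512, width=512)
    print(f"3x3 conv, 1 -> 16 channels: {conv2d_params(1, 16, 3)} parameters")
    print(f"weights + gradients + Adam state: {state / GIB:.2f} GiB")
    print(f"one 64-channel feature map, batch of 32: {acts / GIB:.2f} GiB")
```

With these assumed sizes, a single layer's feature maps occupy exactly 2 GiB, more than ten times the roughly 0.15 GiB of parameter-related state. This is why image size and batch size, and not only the parameter count, drive GPU memory requirements.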